EMO work alignment using musical content

The starting point is two sources of data:

- Early Music Online (EMO) cataloguing metadata for (complete) books, each of which generally consists of several vocal part-books. This includes item-sequences (with composers where known), which we assume to be in the same order within each of the part-books (is this safe?), although not every item in a book has the same number of voices.

- Music Encoding Initiative (MEI) files output by Aruspix after processing separated page-images. Only the music is recognised (no text), so it is hard to identify piece-breaks where these do not coincide with page-breaks.

What I'm trying to do

Use the musical content recognised by Aruspix as a means of confirming the identity (or close similarity - another discussion ...) of works against both the internal collection (items already in EMO) and external resources such as the RISM OPAC.

But first, there are some things we can do when parsing the Aruspix MEI to improve the accuracy of recognition slightly:

Heuristic corrections

Laurent Pugin's extension to MEI for Aruspix gives detailed layout info for each recognised glyph/symbol, so we can sometimes use these for contextual correction.

Bass clef shape:

A printed bass-clef at this period is often made up of a longa on the 4th line up, followed very closely by two minims, the first (often, but not always, with a short up-stem) in the top space, the second (stem down) in the next space down.

Aruspix recognises the vertically-aligned pair of minima glyphs as a bass clef; however, by this point it has already "recognised" the false "longa" glyph as a note, which we simply delete.
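As a rough illustration of this deletion step, here is a minimal Python sketch. The `Symbol` record, its field names and the `max_gap` threshold are all assumptions made for the example; they are not taken from the Aruspix MEI extension, whose layout attributes would need to be mapped onto something like this first.

```python
from dataclasses import dataclass

@dataclass
class Symbol:
    kind: str   # "note" or "clef" (hypothetical labels)
    value: str  # e.g. "longa", "minima", "F4"
    x: int      # horizontal position of the glyph, in pixels

def drop_false_longa(symbols, max_gap=40):
    """Delete a longa 'note' that sits immediately left of a recognised F clef.

    max_gap is an assumed pixel threshold for "followed very closely".
    """
    cleaned = []
    for sym in symbols:
        is_f_clef = sym.kind == "clef" and sym.value.startswith("F")
        if (is_f_clef and cleaned
                and cleaned[-1].kind == "note"
                and cleaned[-1].value == "longa"
                and sym.x - cleaned[-1].x <= max_gap):
            cleaned.pop()  # the "longa" was really the left part of the clef
        cleaned.append(sym)
    return cleaned

line = [Symbol("note", "longa", 10), Symbol("clef", "F4", 30),
        Symbol("note", "minima", 80)]
print([s.value for s in drop_false_longa(line)])
```

A longa that is not close to a following bass clef is left alone, so genuine longas elsewhere on the line survive.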

Sometimes a bass clef is like a reversed C followed by a pair of dots above and below the second line down:

Aruspix does not recognise the "reverse C" glyph as a bass clef, but it does recognise and insert at least one of the dots with no meaningful context.

Missed clefs:

We can improve matters on pages where at least the first clef has been recognised by adding an editorial clef (same as the previous one) where it is missing at the beginning of a line before calculating pitches on that line. (The reason we need to do this is that Aruspix otherwise assumes a default C1 (soprano) clef.)
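A minimal sketch of this clef-propagation idea, in Python. The representation of a page as a list of staff lines, each a list of small dictionaries, is an assumption for the example and not the structure of the Aruspix MEI itself.

```python
def fill_missing_clefs(staff_lines):
    """Prepend an editorial clef to any line that does not start with one.

    The editorial clef copies whichever clef was in force at the end of
    the previous line. Lines before the first recognised clef are left
    untouched, since there is nothing to copy yet.
    """
    current = None  # clef in force at the end of the previous line
    fixed = []
    for line in staff_lines:
        line = list(line)
        starts_with_clef = bool(line) and line[0]["kind"] == "clef"
        if not starts_with_clef and current is not None:
            line.insert(0, {"kind": "clef", "value": current, "editorial": True})
        for sym in line:
            if sym["kind"] == "clef":
                current = sym["value"]  # track mid-line clef changes too
        fixed.append(line)
    return fixed

page = [
    [{"kind": "clef", "value": "F4"}, {"kind": "note", "value": "minima"}],
    [{"kind": "note", "value": "minima"}],  # clef missed by recognition
]
print(fill_missing_clefs(page)[1][0])
```

Marking the inserted clef as editorial keeps it distinguishable from clefs that were actually recognised, which matters if the pitches are later recalculated.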

Other frequent recognition problems

Accidentals

There is no obvious heuristic for improving recognition of sharp or flat signs. These are very variable in shape and, without extensive training (not really feasible over large collections), will be hard to recognise reliably without human intervention.

Dots aligned with staff lines

Some of the printers used a combined dot/line glyph which can look simply like a bulge in the staff-line rather than a separate symbol (it's unusually clear in this example). This, not surprisingly, can cause problems for Aruspix.

Generally speaking, the most serious errors made by Aruspix (like other OMR applications) tend to be ones that affect several following notes. This applies especially to clefs and accidentals (the latter particularly in "key-signatures").

Diatonic melodic strings

The Aruspix MEI gives the pitch of notes as letter-name and octave, like:

<note xml:id="m-f14db671-8250-410b-a2fb-be161a8e273a"
      pname="b"
      oct="4"
      dur="minima" />

so it is trivial to extract note-pitch sequences for each page of music. (NB The pitches have to be corrected where we have heuristically changed a clef or "key-signature" - this is a bit tricky.)
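For instance, the extraction could look something like the following Python sketch, which uses only the standard-library `xml.etree.ElementTree`. The `<layer>` wrapper in the sample is an assumption for the example; the code ignores namespaces and simply collects every `note` element that carries a `pname`. It does not apply any of the clef or "key-signature" corrections.

```python
import xml.etree.ElementTree as ET

def note_pitches(mei_xml):
    """Return (pname, oct) pairs for every note, in document order."""
    root = ET.fromstring(mei_xml)
    pitches = []
    for elem in root.iter():
        tag = elem.tag.rsplit("}", 1)[-1]  # drop any "{namespace}" prefix
        if tag == "note" and elem.get("pname"):
            octave = elem.get("oct")
            pitches.append((elem.get("pname"),
                            int(octave) if octave is not None else None))
    return pitches

sample = """<layer>
  <note xml:id="n1" pname="b" oct="4" dur="minima" />
  <rest dur="minima" />
  <note xml:id="n2" pname="c" oct="5" dur="semibrevis" />
</layer>"""
print(note_pitches(sample))  # [('b', 4), ('c', 5)]
```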

In order to match incipits via the RISM OPAC (from which we can retrieve records using a simple URI scheme) we have to omit the octave, so we have a simple alphabetic string. Since, in general, phrases in Renaissance music do not continue across rests longer than a semiminima (crotchet), we insert line-breaks at each group of rests whose total duration is equal to or greater than that of a minima.
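The string-building step might be sketched as below. The event tuples and the duration table are assumptions for the example: durations are expressed in minims and assume duple (imperfect) mensuration throughout, which will not hold for every piece.

```python
# Assumed relative durations, in minims, under duple mensuration.
DUR_IN_MINIMS = {
    "maxima": 16, "longa": 8, "brevis": 4, "semibrevis": 2,
    "minima": 1, "semiminima": 0.5, "fusa": 0.25, "semifusa": 0.125,
}

def rism_string(events):
    """Turn (kind, pname, dur) events into octave-free letter strings.

    A new line starts whenever the rests between two notes add up to
    at least one minima.
    """
    lines, current, rest_total = [], [], 0.0
    for kind, pname, dur in events:
        if kind == "rest":
            rest_total += DUR_IN_MINIMS[dur]
            continue
        if rest_total >= 1.0 and current:
            lines.append("".join(current))
            current = []
        rest_total = 0.0
        current.append(pname)
    if current:
        lines.append("".join(current))
    return lines

events = [
    ("note", "e", "minima"), ("note", "f", "minima"),
    ("rest", None, "semiminima"),            # too short to break the phrase
    ("note", "e", "minima"),
    ("rest", None, "minima"),                # breaks the phrase
    ("note", "d", "minima"),
    ("rest", None, "semiminima"), ("rest", None, "semiminima"),  # sum = minima
    ("note", "c", "minima"),
]
print(rism_string(events))  # ['efe', 'd', 'c']
```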

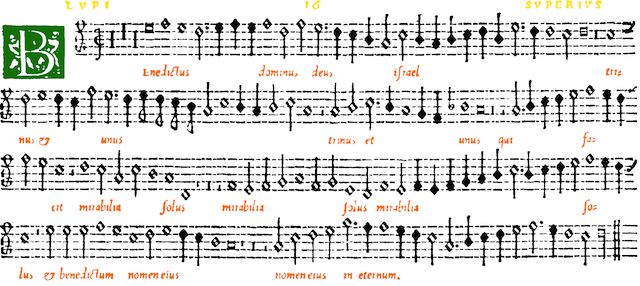

Example: K4c11_009_part_a:

The "RISM-string" we extract for this page is:

efedefefdfedcbacbabcdbeddcd

cdedcfededcbacbabca

abcbfgfbf

abcdefedfec

dcacbad

fefgafgcdfefefgabcbcdefedc

eeefecdedcba

acdegffedcabcdefedcd

RISM incipit searching

Now, as it happens, this page begins with the incipit of a work (Lupi's 'Benedictus'), and indeed, a search using the first 13 notes, "efedefefdfedc", on the RISM OPAC works beautifully. We find a manuscript copy immediately: Click here

But there is a problem. The "correct" length of RISM incipits is a very vague concept; it seems to be defined as whatever the cataloguer deems necessary to identify the work. This may be all well and good within a particular source or genre or period, but it most certainly is not good enough in the general case.

If we shorten the incipit search in RISM to "efedefef" (8 notes), we get more hits, of course, including another MS copy of Lupi's 'Benedictus': Click here

We do not find this second version using the longer incipit. So we cannot reliably and definitively identify works via the RISM OPAC with a single search, even when their incipits are present in RISM. We have to get the incipit length right, by magic, presumably.
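If incipits can be compared locally as plain letter strings, rather than through the OPAC search form, one way to avoid guessing the length is to rank records by how many opening notes they share with the query. The Python sketch below does this with a longest-common-prefix count; it is only an illustration, not something the OPAC offers, and the catalogue entries and their identifiers are invented toy data, not real RISM records.

```python
def common_prefix_len(a, b):
    """Number of leading characters that two strings share."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n

def rank_by_prefix(query, catalogue, min_len=8):
    """Return (shared_length, record_id) pairs, longest match first."""
    hits = [(common_prefix_len(query, inc), rid)
            for rid, inc in catalogue.items()]
    return sorted([h for h in hits if h[0] >= min_len], reverse=True)

# Toy incipits, invented for the example.
toy_catalogue = {
    "toy-1": "efedefefdfedcbacb",  # agrees with the query for all 13 notes
    "toy-2": "efedefefgfe",        # agrees for the first 8 notes only
    "toy-3": "cdedcfed",           # unrelated opening
}
print(rank_by_prefix("efedefefdfedc", toy_catalogue))
# [(13, 'toy-1'), (8, 'toy-2')]
```

A single pass then returns both the 13-note and the 8-note agreement, instead of requiring two separate searches.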

Fortunately, RISM have released their database as Open Data in MARC XML format: (Link to RISM Open Data page). We have downloaded this data and can experiment with using an n-gram technique to deal with this issue, and maybe help with another that arises: note-pitch errors.

As the eagle-eyed reader will have spotted, the third line "abcbfgfbf" (corresponding to the middle of the second system of the example) contains two errors - the note "a" has twice been recognised as "f"; it should read "abcbagfba".
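The size of this discrepancy can be measured with an ordinary edit (Levenshtein) distance, which is the kind of tolerance an approximate matcher would need to allow. The following is a standard textbook implementation in Python, given here only as an illustration.

```python
def levenshtein(a, b):
    """Minimum number of insertions, deletions and substitutions
    needed to turn string a into string b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        curr = [i]
        for j, cb in enumerate(b, 1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (ca != cb)))  # substitution
        prev = curr
    return prev[-1]

print(levenshtein("abcbfgfbf", "abcbagfba"))  # 2
```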

Approximate matching of melodic n-grams

We can use n-grams extracted from the page's RISM-string to cross-identify works (or at least find pages containing melodically-similar music) within our recognised EMO collection. In principle, there should be a maximal number of similarities between n-grams from pages containing the same music - and a lesser, but still high, number of similarities where the music is closely related (e.g. contains a quotation, as is frequently encountered in Renaissance music).
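A minimal sketch of the idea in Python is given below. It uses exact n-gram overlap rather than agrep-style approximate matching, and the choice of n = 5 and of the scoring formula (shared n-grams divided by the size of the smaller set) are assumptions for the example. N-grams are taken within each line of the RISM-string, so they never span a phrase-breaking rest.

```python
def ngrams(lines, n=5):
    """Set of all length-n substrings found within each line."""
    grams = set()
    for line in lines:
        for i in range(len(line) - n + 1):
            grams.add(line[i:i + n])
    return grams

def shared_ngram_score(page_a, page_b, n=5):
    """Fraction of the smaller page's n-grams that also occur in the other."""
    a, b = ngrams(page_a, n), ngrams(page_b, n)
    if not a or not b:
        return 0.0
    return len(a & b) / min(len(a), len(b))

recognised = ["abcbfgfbf", "abcdefedfec"]  # with the two recognition errors
corrected = ["abcbagfba", "abcdefedfec"]   # same lines, errors fixed
print(shared_ngram_score(recognised, corrected))
```

Here the two recognition errors wipe out every 5-gram of the first line, but the second line still matches in full, so the pair scores 7 out of 12. Reducing each pair of pages to a single number like this may also make large result sets easier to sort and inspect.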

First attempts, using agrep, seem to work, but the task of analysing the vast amount of result-data that is produced has so far defeated me.