Supercomputing opportunities for musicological investigations

The Transforming Musicology project carried out a preliminary investigation of the viability of supercomputing for the various tools being used within the project. This blog post summarises what we found.

What follows is a brief discussion of some case studies and observations, and then a few points that we hope might be helpful to those considering undertaking such tasks in the future.

Our priority in this investigation has been to minimise the amount of developer time required to adapt pre-existing applications to a parallel processing system. This reflects our use of the clusters as a research and investigation tool. In a production environment, where heavy use is expected, the engineering time required to adapt the tools becomes a more obviously worthwhile investment. Projects that use supercomputing clusters within a musicological context in this way include SIMSSA and Peachnote.

Can the investigation take advantage of parallel computation?

When multiple processes and pieces of hardware are available, it is desirable for tasks to be easily divided in such a way that there are few or, ideally, no dependencies between the parts. Where that division is easily and cleanly achievable, the problem is described as ‘embarrassingly parallel’.

Our early music research strand includes several examples of tasks that are embarrassingly parallel. In our paper for ISMIR 2016, we explored how the musical language of arrangements of vocal music for lute differs from that of idiomatic, ‘native’ lute compositions. To do this, we searched for instances of particular embellishment patterns across two large collections of lute music using an implementation of SIA(M)ESE (Wiggins, Lemström and Meredith, 2002).

Since the result of each query was not dependent on the result of any other query, the whole operation – which amounted to over 70,000 searches over a combined database of about 4,000 lute pieces – was easily divided up and used in a parallel-processing environment. Since then, we have considered how to record detailed information about the provenance, methods and results of these queries as they occur (this work was presented at the Music Encoding Conference in Tours in May 2017). Since this does not change the fundamentally parallel nature of the task, it would not affect the use of supercomputing resources.
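As a rough illustration of what 'embarrassingly parallel' means here, the following Python sketch hands each query to a pool of worker processes. It is not the project's code: the toy corpus, the `search` function (a naive exact-match search standing in for SIA(M)ESE) and the `run_all` helper are all hypothetical names invented for this example.

```python
from concurrent.futures import ProcessPoolExecutor

# Hypothetical toy corpus: piece id -> sequence of MIDI pitches.
CORPUS = {
    "piece_a": [60, 62, 64, 62, 60],
    "piece_b": [67, 65, 64, 62, 60],
    "piece_c": [60, 62, 64, 65, 67],
}


def search(pattern):
    """Return (pattern, sorted ids of pieces containing it as a contiguous run).

    A naive exact-match search, used only as a stand-in for a real
    pattern-matching algorithm.
    """
    n = len(pattern)
    hits = [
        piece_id
        for piece_id, notes in CORPUS.items()
        if any(tuple(notes[i:i + n]) == pattern
               for i in range(len(notes) - n + 1))
    ]
    return pattern, sorted(hits)


def run_all(patterns, workers=2):
    """Run every query as a separate task; no query depends on another."""
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # map() distributes the patterns across the worker processes.
        return dict(pool.map(search, patterns))
```

Because `search` reads nothing but the corpus and its own pattern, the queries can run in any order and on any worker. On platforms that start workers by spawning a fresh interpreter, `run_all` should be called from under an `if __name__ == "__main__":` guard.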

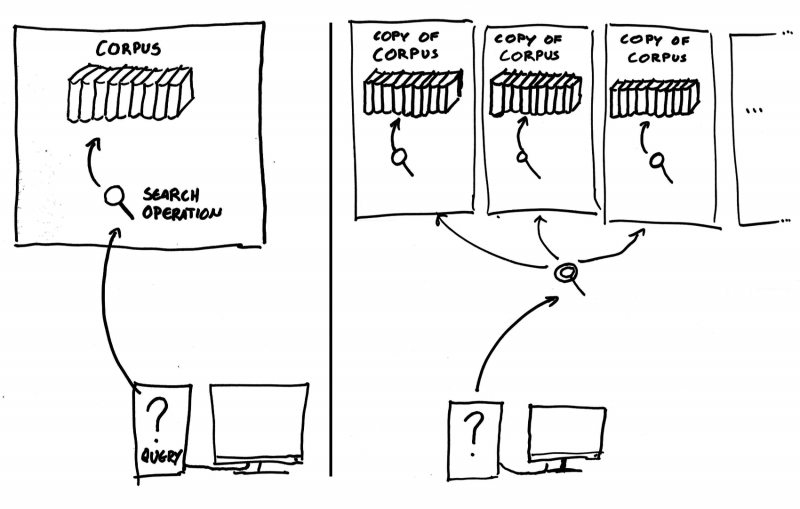

This sort of task, with many queries carried out over the same corpus, is quite common for document retrieval applications in many domains, and can work well in a supercomputing context – Peachnote n-gram searching is a publicly-visible example. Nonetheless, there can be problems. Each time that a process carried out a search of our lute music data, the whole corpus had to be loaded into memory. This carries with it two risks – firstly, there is an extra time requirement for loading and unloading the collection each time and, secondly, if multiple processes are operating on a system which divides a fixed amount of memory amongst them all, then the available memory can be exhausted. By contrast, if a single process runs many searches over the same corpus, then the data need only be loaded once. For a small corpus and a large number of processes, the benefits of the parallelisation can be expected to outweigh the costs.

Figure 1. Simplified illustration showing a risk of parallel computation. The left image shows a query being operated on by a single process. In the right-hand case, the task has been divided into parallel processes, but each must load and maintain a full copy of the corpus being searched.
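The trade-off described above can be made concrete with some back-of-the-envelope arithmetic. The sketch below is a deliberately crude cost model, not a measurement of our tools: the function names and every number used with them are assumptions chosen for illustration, and it ignores scheduling overhead, disk contention and caching.

```python
import math


def wall_time(n_queries, n_processes, load_s, query_s, reload_each_query):
    """Estimated wall-clock seconds for a batch of independent queries.

    load_s  -- assumed seconds to load the corpus into memory
    query_s -- assumed seconds for one search once the corpus is loaded
    """
    # Queries handled by the busiest process.
    per_process = math.ceil(n_queries / n_processes)
    if reload_each_query:
        # The corpus is loaded again for every single search.
        return per_process * (load_s + query_s)
    # Each process loads the corpus once, then runs all of its searches.
    return load_s + per_process * query_s


def peak_memory_mb(n_processes, corpus_mb):
    """Every process holds its own full copy of the corpus."""
    return n_processes * corpus_mb
```

With made-up figures of 100 queries, a 2 second load and 0.5 seconds per search, four processes that reload the corpus for every query need 62.5 seconds, which is slower than the 52 seconds taken by a single process that loads once. Four processes that each load once need 14.5 seconds, but hold four copies of the corpus in memory.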

In another investigation, this time involving audio, a similar issue was seen for the search tool audioDB, but here, the problem is more pronounced. Since audio files are larger than score files for similar amounts of music, audio corpora are generally much larger, and where each separate processing thread loads a complete copy into memory, the hardware demands grow hugely, whilst the time taken to initialise each search instance also becomes a prohibitively large part of the processing time. This places a severe limit on the extent to which the software can be used in supercomputing environments until such a time as the code can be refactored to avoid this issue, for example by allowing the memory containing the corpus to be shared.
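To give a sense of what sharing the corpus memory could look like, here is a minimal sketch using Python's standard `multiprocessing.shared_memory` module. It does not reflect how audioDB works or how it would actually be refactored: the `publish` and `read_feature` helpers, and the idea of storing the corpus as a flat array of floating-point feature values, are assumptions made purely for illustration.

```python
import struct
from multiprocessing import shared_memory


def publish(features):
    """Copy a list of floats into a new shared-memory block.

    Returns the block and the number of values stored. The caller is
    responsible for calling close() and unlink() on the block when done.
    """
    size = len(features) * 8  # 8 bytes per double
    shm = shared_memory.SharedMemory(create=True, size=size)
    struct.pack_into(f"{len(features)}d", shm.buf, 0, *features)
    return shm, len(features)


def read_feature(name, count, index):
    """Attach to an existing block by name and read one value from it.

    A worker process would call this instead of loading its own copy
    of the corpus.
    """
    if not 0 <= index < count:
        raise IndexError(index)
    shm = shared_memory.SharedMemory(name=name)
    try:
        return struct.unpack_from("d", shm.buf, index * 8)[0]
    finally:
        shm.close()  # detach; the data stays available to other processes
```

In this arrangement one process pays the loading cost and the memory cost once, and each search process only needs the block's name and length to read from it.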

The limitations described above arise largely because we were using software that we had developed for single-user, desktop-based operations. Many of the problems can be avoided by designing software specifically with supercomputing approaches in mind. This was not the focus of this investigation.

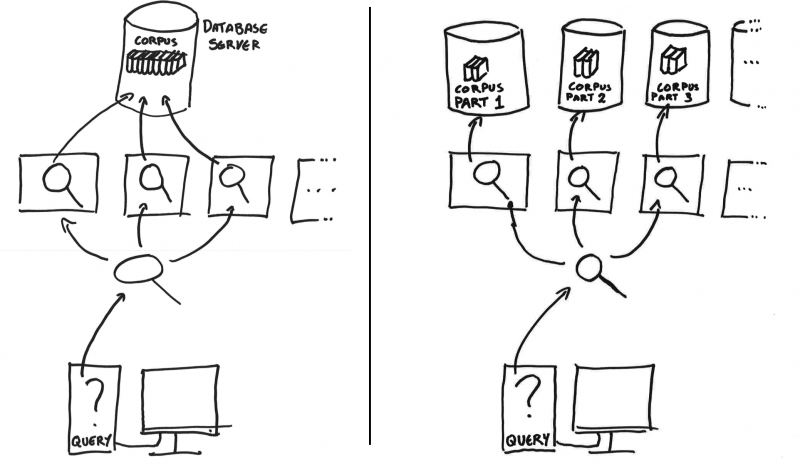

A standard database server provides one way to facilitate multi-user (or multi-process) retrieval. Parallel processes calling on a robust database can avoid many of the bottlenecks and memory issues that we have described above, as long as the parallelisation involves many small, simple queries. Where the query itself involves parallel tasks, some extra optimisation may be necessary.

For example, in searching a collection of automatically-transcribed pages of music, we search for multiple, overlapping sequences (n-grams), in the hope that some of these shorter extracts will be correctly transcribed and robust to musical variation, even where the longer form might not show. The large query generated is slow even in one instance. Turning a single search into a set of parallel queries is possible, and the simplest way to do this is to partition the database itself – breaking it up into more manageable chunks, querying each separately, and then merging the results.

Figure 2. Parallel computation using a single database to manage access to the corpus. On the left, all processes access a single corpus instance, reducing the load on each process. On the right, the database itself has been split up which, where the data supports it, can speed up queries.
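The partition-query-merge approach described above can be sketched in a few lines of Python. This is an in-memory toy under stated assumptions, not our database setup: pages are represented as lists of symbols, and `ngrams`, `partition`, `query_shard` and `search` are hypothetical helper names. In a real deployment each shard would be a separate database partition and each call to `query_shard` could run in its own process.

```python
from collections import Counter


def ngrams(tokens, n):
    """All overlapping n-grams of a token sequence."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def partition(pages, shards):
    """Split {page id: tokens} into `shards` roughly equal dictionaries."""
    ids = sorted(pages)
    return [{pid: pages[pid] for pid in ids[i::shards]}
            for i in range(shards)]


def query_shard(shard, query, n):
    """Count, per page in one shard, how many query n-grams it contains."""
    wanted = set(ngrams(query, n))
    scores = Counter()
    for page_id, tokens in shard.items():
        matched = wanted & set(ngrams(tokens, n))
        if matched:
            scores[page_id] = len(matched)
    return scores


def search(pages, query, n=3, shards=2):
    """Query each shard independently, then merge the partial results."""
    merged = Counter()
    for shard in partition(pages, shards):
        merged.update(query_shard(shard, query, n))
    return merged.most_common()
```

A page with a transcription error in the middle of the passage still scores on the n-grams that survived intact, which is the motivation for searching overlapping short sequences rather than the full passage.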

Can judgement be accommodated in computational investigations?

Our musicology of the social media thread has made heavy use of network analytics tools, where one of the most computer-intensive aspects is finding two-dimensional layouts for network graphs that best reflect the relationships between elements in the graph. If each node in a network graph is a musical work or an online user, then the closeness of nodes in the drawn image should reflect some sort of ‘similarity’, at least in terms of the aspects that the network represents.

Algorithms for graph layout are complex, slow and very difficult to parallelise, since the position of each node is dependent on the positions of its neighbours. Nonetheless, the process can use supercomputing resources to speed up the underlying calculations. What proved a greater challenge in our use of supercomputing resources is that the process of choosing what to graph and what parameters are best involves repeated and frequent tweaking, with user input at every stage. This need for a user in the loop necessarily has an effect on the speed gains that can be achieved with such a system.
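To show why layout resists clean division, here is one iteration of a simplified force-directed layout in the style of Fruchterman and Reingold. It is a generic textbook scheme and not necessarily the algorithm used by our network analytics tools; the function name `layout_step` and the parameters `k` and `step` are assumptions for this sketch.

```python
import math


def layout_step(pos, edges, k=1.0, step=0.1):
    """Return new positions after one update of a spring/repulsion layout.

    pos   -- {node: (x, y)}
    edges -- iterable of (node, node) pairs
    k     -- assumed ideal edge length
    step  -- assumed fraction of the net force applied per iteration
    """
    disp = {v: [0.0, 0.0] for v in pos}
    nodes = list(pos)

    def push(u, v, force):
        """Move u away from v (and v away from u) by `force`."""
        dx = pos[u][0] - pos[v][0]
        dy = pos[u][1] - pos[v][1]
        dist = math.hypot(dx, dy) or 1e-9  # avoid division by zero
        disp[u][0] += dx / dist * force(dist)
        disp[u][1] += dy / dist * force(dist)
        disp[v][0] -= dx / dist * force(dist)
        disp[v][1] -= dy / dist * force(dist)

    # Every pair of nodes repels, so each node needs all other positions.
    for i, u in enumerate(nodes):
        for v in nodes[i + 1:]:
            push(u, v, lambda d: k * k / d)

    # Connected nodes attract (a negative push).
    for u, v in edges:
        push(u, v, lambda d: -(d * d) / k)

    return {v: (pos[v][0] + step * disp[v][0],
                pos[v][1] + step * disp[v][1]) for v in pos}
```

The force sums within a single iteration can be computed in parallel, but each iteration needs the complete set of positions from the previous one, so the iterations themselves must run in sequence.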

Summary and advice

Based on these investigations, we can suggest these key questions to those considering using supercomputing resources:

- How parallel is it? The ideal problem either requires lots of independent calculations or can be broken down into separate tasks that have little or no reliance on one another.

- What resources do the tools require? The ideal tools run for each task should either have small memory use or be able to share memory between processes. If the time to start up a tool is too great, then repeating operations in a process that is already running may be faster than running more processes, in which case, smaller numbers of parallel instances may be preferable.

- How much human intervention is desirable? Many operations that seem to be embarrassingly parallel fit into a cyclical workflow that involves a researcher responding to the results of a process and adapting their investigation as a result. This sort of interaction can be hard to build into a supercomputing paradigm.

- How slow is the operation? Some tasks can be completed by leaving them running overnight or over a weekend on a single, simple system and, once they have been done, never need to be run again. In those cases, more complicated approaches may be unnecessary.

Musical tasks can often adapt well to supercomputing infrastructures although, as we have seen, tools may need to be adapted to make the conversion successfully. However, we should be aware of the dangers of ignoring users and focussing on a purely mechanistic view of musicology, one without the iterative processes that we know form a large part of our research (cf. Frans Wiering, ‘What is Digital Musicology and what can be expected from it?’, Workshop presentation, London, 2012). It is all too easy to ignore the importance of user interaction when planning these adaptations, and this is an area that clearly needs more explicit exploration.

We hope that this has been a helpful overview. This is a large and complex topic, and neither the investigations conducted as part of Transforming Musicology nor the format of a blog post allow us an exhaustive exploration.

If you’d like to talk to us about your own musicology research, and whether supercomputing might be applicable – or if you have already used it in a musicology context – do get in touch.