EMO catalogue and images: Where to go from here

Tim has already discussed, in a blog entry, some of our efforts to make use of the data provided by EMO, the Early Music Online project. In that post, he considers some of the issues involved in transcribing and comparing the musical content of the EMO images.

One issue that arises immediately is that transcribing the series of images that make up a source yields a single musical object that spans an entire set of part books. That object is easily divided into graphical or typographical units: one can divide it by pages, systems (lines of music) or symbols. The musical content, however, should ideally be split into pieces, with the vocal parts that belong to the same piece then recombined to build a score.

Whilst the work alignment blog entry describes ways to work around this problem, this post attempts to address it directly. Broadly speaking, there are three approaches we could take to this problem:

- Fully automatic, based on the images, their transcriptions and the information in the catalogue

- Fully manual

- Some hybrid of the two

There are several ways in which an automatic method might work: transcribing the textual material along with the music might help, whilst ornamental barlines and empty systems might be used as indicators of the ends of pieces. Many sources use ornamental initials at the start of a piece, and this too might help. One might even construct some sort of musical model for recognising opening gestures, or recognise new pieces by the fresh musical material that appears (for instance, using statistical modelling along the lines of IDyOM).

At this stage, we are trying none of these. There are several reasons why not. Finding boundaries between pieces is only part of the problem: we then need to associate voice parts with one another, and the resulting score with a work title and, if possible, an attribution. Since the catalogue is not quite of the quality or level of detail necessary to support that, manual intervention is necessary anyway.

Even given an ordered list of pieces, we also would need to know, for example, which pieces are omitted from a book because they are written for fewer voices, or which ones occur twice in a book because two parts are written into the same volume. For the EMO collection, we cannot even assume that pieces occur in the same order in all books, or that the order in which systems of a piece appear in a volume is the order in which it should be performed.

All this, and more, means that it is hard to imagine a fully automatic approach being successful, or repaying the time spent designing and training the necessary software, given that the number of sources is, initially at least, limited. Instead, we plan to develop a user-friendly application to support an almost fully manual process.

An interlude: instrumental music

Instrumental music does occur in the EMO collection and, when it is for lute solo, it still falls nicely within our project scope. Here, though, the problem of piece identification (and of metadata) is somewhat reduced by several factors:

- Howard Mayer Brown's "Instrumental music printed before 1600", which provides more detail than the British Library catalogue alone

- The fact that these are linear sources, in the sense that, since they are written for one player, they have one book, one part, and so progress through the contents once and then stop.

- There are fewer of them

Although these might also be arguments for an automated splitting, in fact, they have made manual work a lot easier and, thanks to the efforts of Reinier de Valk, we now have the ability to split the automatically-transcribed musical content of lute books into musical works.

Splitting vocal music

Initial materials

We start with the following:

- Images from EMO, with openings divided into pages

- The results of Optical Music Recognition (Aruspix), including the position of systems and symbols

- Catalogue information as MARC, including a single field that lists the contents of each source, with each entry given as a title and attribution as they appear in the source

The catalogue inventories have been divided into piece entries in a partially-automatic process. Manual intervention was needed in cases where error or inconsistency in the catalogue itself meant that the division generated was incorrect.
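As a rough illustration of the automatic part of that division, the sketch below splits a contents note into piece entries. It assumes the ISBD-style punctuation often found in MARC contents notes, with ` -- ` between entries and ` / ` before an attribution; the actual EMO records may well be punctuated differently. The names `PieceEntry` and `split_contents` and the sample string are invented for illustration.

```python
from typing import NamedTuple, Optional


class PieceEntry(NamedTuple):
    index: int                  # position within the source's inventory
    title: str
    attribution: Optional[str]  # None when the note gives no attribution


def split_contents(note: str) -> list[PieceEntry]:
    """Split a contents note into entries (assumes ' -- ' and ' / ' punctuation)."""
    entries = []
    for raw in note.split(" -- "):
        raw = raw.strip()
        if not raw:
            continue  # skip empty fragments left by stray separators
        title, sep, attribution = raw.partition(" / ")
        entries.append(
            PieceEntry(len(entries), title.strip(),
                       attribution.strip() if sep else None)
        )
    return entries


# Made-up sample, not taken from the EMO catalogue.
sample = "First piece / Composer A -- Second piece -- Third piece / Composer B"
for entry in split_contents(sample):
    print(entry)
```

A record whose punctuation departs from the assumed pattern would come out with too few or too many entries, which is the kind of case that needs correcting by hand.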

The task

Each music-containing system on each unique page image (there are duplicates) needs to be covered by one or more blocks labelled with:

- A unique inventory item

- A voice

- A number representing the position of that block within that voice's performance of the piece (so that a singer would traverse from block 0 to block 1 and so forth)

In an ideal world, the block would be delimited by symbol number (as recognized by Aruspix), or a pixel position, but the former is vulnerable to changes if a source is reprocessed, and the latter is hard to implement simply. As yet, this is an unresolved issue.
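The labelling described above can be pictured as one small record per block. The sketch below is illustrative only: the class and field names (`Block`, `page`, `system`, `item_id`, `voice`, `position`) are assumptions rather than the application's actual schema, and it treats a block as covering a whole system, leaving aside the open question of how to delimit blocks within a system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    page: int      # index of the unique page image (hypothetical field)
    system: int    # index of the system on that page
    item_id: str   # inventory item the block belongs to
    voice: str     # voice part, e.g. "Cantus"
    position: int  # order within this voice's performance of the item


def reading_order(blocks, item_id, voice):
    """Return one voice's blocks for one item, in performance order."""
    chosen = [b for b in blocks if b.item_id == item_id and b.voice == voice]
    return sorted(chosen, key=lambda b: b.position)


def uncovered_systems(systems, blocks):
    """Return the (page, system) pairs that no block covers yet."""
    covered = {(b.page, b.system) for b in blocks}
    return [s for s in systems if s not in covered]


# Hypothetical data: two systems on page 0 and one on page 1.
systems = [(0, 0), (0, 1), (1, 0)]
blocks = [
    Block(page=0, system=1, item_id="item-1", voice="Cantus", position=1),
    Block(page=0, system=0, item_id="item-1", voice="Cantus", position=0),
]
print(reading_order(blocks, "item-1", "Cantus"))
print(uncovered_systems(systems, blocks))
```

Keeping `position` separate from the page and system indices matters because, as noted earlier, the order of systems in a volume is not always the order of performance.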

The first stage of the process, then, is to run a script over the images, Aruspix output and EMO catalogue data, taking the necessary image geometry, staff locations and source information, whilst generating thumbnail images for the app.

The interface

The application is written in as pure a JavaScript/HTML form as possible, with a very light PHP/MySQL database process running on the server providing initial data and saving the results of editing.

This means that most of the work is done by the browser. This is an advantage in many ways, but it also puts some strain on the client machine, since a source can have many images, all of which must currently be kept in memory (and all of which are drawn more or less immediately).

The app takes the set of images, the list of voices used, if available, and the item titles. It then guesses an allocation, based on an assumption that each piece begins at the top of a page and that voices come in separate books which appear in a canonical order in the sequence of images. It also assumes that all images and all systems on them contain music.
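A guess of this kind might be sketched as follows. This is not the application's code (which runs in JavaScript in the browser); it is a minimal Python illustration under stated assumptions: part books are taken to be of equal length, and pieces are spread evenly through each book. The function name `guess_allocation` and its parameters are hypothetical.

```python
def guess_allocation(n_pages, voices, titles):
    """Guess a starting page index for every (voice, title) pair.

    Assumes equal-length part books, in the given voice order, with pieces
    spread evenly through each book and each starting at the top of a page.
    """
    if not voices:
        voices = ["(unknown voice)"]  # voice list may be unavailable
    pages_per_book = n_pages // len(voices)
    allocation = {}
    for v, voice in enumerate(voices):
        first_page = v * pages_per_book
        for t, title in enumerate(titles):
            # integer interpolation keeps every start on a page boundary
            offset = t * pages_per_book // len(titles)
            allocation[(voice, title)] = first_page + offset
    return allocation


# Hypothetical source: 12 page images, two part books, three pieces.
guess = guess_allocation(12, ["Cantus", "Tenor"], ["A", "B", "C"])
for key, page in guess.items():
    print(key, page)
```

When a book has more pieces than pages, this sketch puts several pieces on the same page, which contradicts the top-of-page assumption; that is one of the cases left for the user to correct.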

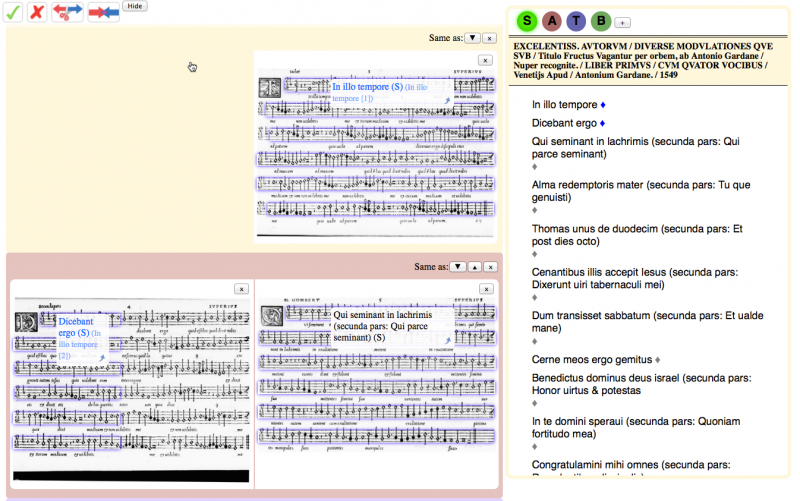

The result looks like this:

The page images are displayed on the left, with the systems (as identified by Aruspix) outlined in blue. A user can select a voice (top right) and then drag a title to the relevant page, causing the other titles to shift round it, creating a new guess of the allocation of pieces to pages.
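How the other titles shift is not spelled out here, so the following is only one plausible reading, sketched in Python rather than the application's JavaScript: the dragged title is pinned to its new page, titles before it are spread evenly over the pages ahead of the pin, and titles after it are spread evenly over the pages that remain. The function `reallocate` and its parameters are hypothetical.

```python
def reallocate(n_titles, first_page, last_page, pinned, pinned_page):
    """Re-guess start pages for one voice after a title has been dragged.

    `pinned` is the index of the dragged title and `pinned_page` the page
    it was dropped on; pages are indices within first_page..last_page.
    """
    if not 0 <= pinned < n_titles:
        raise ValueError("pinned title out of range")
    if not first_page <= pinned_page <= last_page:
        raise ValueError("pinned page out of range")
    before = pinned_page - first_page    # pages available ahead of the pin
    after = last_page + 1 - pinned_page  # pages from the pin to the book's end
    starts = []
    for t in range(n_titles):
        if t < pinned:
            starts.append(first_page + t * before // pinned)
        else:
            starts.append(
                pinned_page + (t - pinned) * after // (n_titles - pinned)
            )
    return starts


# Four titles in an eight-page book; the third title is dragged to page 2.
print(reallocate(4, 0, 7, pinned=2, pinned_page=2))  # [0, 1, 2, 5]
```

A fuller version would keep every title the user has already placed fixed, and interpolate only between neighbouring pins.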

Pages and systems can be split using the scissors button in the top bar and merged using the button next to it. The text of titles can be corrected by double clicking on them (this uses the relatively-new contentEditable attribute). Double clicking also makes buttons appear for splitting or removing items.

Whilst a list only allows for a flat structure for describing pieces, there are frequent instances, including in the screenshot above, where a hierarchy is required: movements in a suite or mass, sections in a movement, partes in a chanson. The interface here caters for one layer of hierarchy: dragging a diamond from one piece to another indicates that the two (or more) are part of a single parent unit. More complex hierarchies are not currently possible.
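A single layer of grouping over a flat list can be modelled very simply. The sketch below is an assumption about how such a structure might be represented, not a description of the application's internals; the class `Inventory` and its methods are hypothetical, and the titles are placeholder data.

```python
class Inventory:
    """A flat list of pieces with at most one layer of grouping."""

    def __init__(self, titles):
        self.titles = list(titles)
        # group[i] is the index of the first piece in i's group;
        # an ungrouped piece is a group of one.
        self.group = list(range(len(self.titles)))

    def link(self, a, b):
        """Record that pieces a and b belong to the same parent unit."""
        old_a, old_b = self.group[a], self.group[b]
        merged = min(old_a, old_b)
        for i, g in enumerate(self.group):
            if g in (old_a, old_b):
                self.group[i] = merged

    def groups(self):
        """Return the groups of titles, in inventory order."""
        grouped = {}
        for i, g in enumerate(self.group):
            grouped.setdefault(g, []).append(self.titles[i])
        return [grouped[g] for g in sorted(grouped)]


# Placeholder inventory: three mass movements followed by a separate piece.
inv = Inventory(["Kyrie", "Gloria", "Credo", "Chanson"])
inv.link(0, 1)  # corresponds to dragging a diamond from one piece to another
inv.link(1, 2)
print(inv.groups())  # [['Kyrie', 'Gloria', 'Credo'], ['Chanson']]
```

Because each piece stores only a group identifier, a group cannot itself contain groups, which mirrors the one-layer limit described above.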